How Mixpanel now runs 50% faster than before

One of the happier problems we have at Mixpanel has been growing with our customers. As they scale up, so does the volume of data they want to analyze in order to build great products, and as those things scale up, so do our infrastructure costs. The sheer volume of data we handle— over 26,000 businesses and over 9 trillion data points per year is enormous. For our customers, the difference between instant insights and eventual insights is monumental.

Making sure those insights come quickly and economically is a large part of my role as a Technical Lead Manager on the Infrastructure team. That’s why we built out a distributed, column-oriented database, Arb, outlined in much more detail here. The scalability of our infrastructure has consistently been a big advantage for us in the sales process and with retaining customers, so maintaining that edge going forward is crucial.

A unique aspect of our data workload compared to most databases is flexibility of both queries and data. Flexible queries means customers can make queries with arbitrarily complex filters, to drill down on billions of events in real-time. That means if a customer wants to look at events filtered down to a very specific cohort of users that they just defined, based on any properties they’ve tracked to Mixpanel, they can. On the data side, because we allow flexible schemas for ease of integration, events can have properties with mixed-type, and sometimes undefined, fields.

We analyzed performance of our query engine on particularly slow queries using Linux Performance Counters, finding that filter performance was very commonly the bottleneck. We did some research on techniques commonly used in other high-scale analytics databases, such as Cloudera’s Impala, Facebook’s Presto, and HP’s Vertica, for some inspiration on solving this problem. We found two major classes of optimizations that we decided to implement in our own database: vectorization and predicate pushdown.

Here, we dive into how we integrated these ideas to make our core query engine more performant for these complicated, flexible queries.

Batching events means better performance

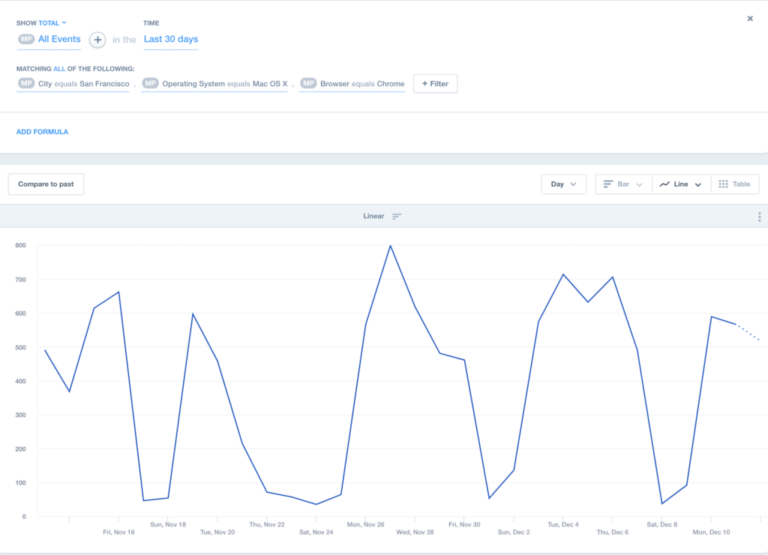

Mixpanel evaluates inputted filters once per event. If you want to filter by city equals San Francisco, operating system is Mac OS X, and browser is Chrome, we will scan every event in the relevant dataset, apply each of these filters to it, and prune away events as they fail to match the relevant criteria, only emitting events the querier were looking for.

A Mixpanel query with filters

While this is fine for small / simple filter expressions, the function calls and branching involved in the tree traversal become a massive overhead for larger customers. Fewer, more complex queries is the more efficient way to query Mixpanel, so it was imperative that our performance be ready to handle every conceivable query our customers throw at us.

To maintain this advantage, we turned to one of the classic optimizations in computer science: batch processing. Rather than reading and filtering a single event at a time, we modified our query engine to operate on batches of events at a time. This allowed us to amortize the expensive reading and filtering over a batch of events.

This improved query throughput by 2x on certain large queries, leading to faster query latencies for our biggest customers. However, it has one limitation: vectorization requires data for all queried properties to have the same data type (they must all be strings or numbers or datetimes). This, we realized, prevented a large amount of queries from being vectorized, since our flexible data ingestion layer allows for variable schemas.

Finding uniformity in messiness

We cannot completely solve the problem of variable data. We can, however, attempt to infer which data type a query actually requires from the filters in the query. By looking at sub-expressions of the filter tree, we could determine that even though a given property may be mixed-type, we can make some guarantees about its type higher up in the filter expression. This is best illustrated by example. Take the following filter:number(event[“video_id”]) == 1234 and event[“video_size”] == “Medium”

Suppose video_id is a mixed-typed property, meaning sometimes is classified as a number and sometimes as a string. However, the filter casts it to a number; this means that the “predicate” number(event[“video_id”]) is guaranteed to be a number. Similarly, suppose that “video_size” is sometimes a number and sometimes a string. However, we know that the equality operator must produce a boolean. Thus, we know that the predicate event [“video_size”] == “Medium” is guaranteed to be a boolean, no matter what the actual type of video_size is. With this, we’re able to turn the filter on properties of mixed-types into a filter on “predicates” of uniform types (like casting or equality). This enables vectorization to kick-in and give us the 2x improvement in throughput.

To accomplish this, we decompose a filter into these predicates and give that information to our event-reading logic. As we read event properties of mixed-types, we use this predicate information to convert those properties into uniformly typed predicates. Because our filters now operate on uniformly typed data, they can be batch processed in the manner described above, and we’re able to achieve the 2x improvement on more types of queries!

Performance is a feature

We’re immensely proud of Mixpanel’s record of reliability and speed and will put it up against anyone else’s. And we’re excited to keep finding the cutting-edge improvements like vectorization and predicate pushdown that will continue to allow us to continue to deliver faster, more cost-efficient experiences for our customers.

In the meantime, the results of these improvements are already paying off and have helped us pass these benefits on to our customers through better pricing models.