Metrics that matter to AdStage

Ad networks like Google and Facebook are as byzantine as they are powerful. Paid marketers are often on the front lines of making sense of this convoluted environment, expected to manage performance across dozens of ad networks, each of which has its own reporting standards, nomenclature, preferred ad formats, bidding strategies, and real-time demands.

In an industry where success and failure are measured in one-percent increments, getting a holistic picture of how advertising strategies are performing across all of those networks is an imperative, yet onerous, task.

But it’s not a task that intimidates Paul Wicker, AdStage’s VP of Product. With a mission to help paid marketers make the most of every dollar they spend on ad networks, AdStage collates and visualizes the myriad data points from Google, Amazon, Facebook, and other ad networks that paid marketers and agencies manage day in and day out, and automates much of the routine work of managing them.

In the latest installment of our Metrics that Matter series, Wicker shares:

- The framework AdStage relies on to measure product success.

- How a mission-led product strategy clarifies which features paid marketers need.

- How Mixpanel helped upend conventional wisdom about user onboarding.

Mixpanel: What’s your methodology for making and measuring product decisions?

I needed a framework to write product requirements quickly without defining metrics that don’t matter. So I developed a methodology called “discover, use, and rely” that I apply to each new product and feature.

Did the potential user discover the feature? Did they use the feature? And do they rely on that feature as part of their workflow? The more people you get into the “rely” category, the more you can count on those users to keep using the product and to renew.

For example, we launched a new report widget that lets people display their Facebook and Google ads on an AdStage Report dashboard. Before we built the feature, we established how we would measure “discover, use, and rely.” In this case, we looked at:

- Did the user click to open this new widget? (Discover)

- Did they configure settings and hit save? (Use)

- Of the people who created that widget, how many came back and did it again? (Rely)

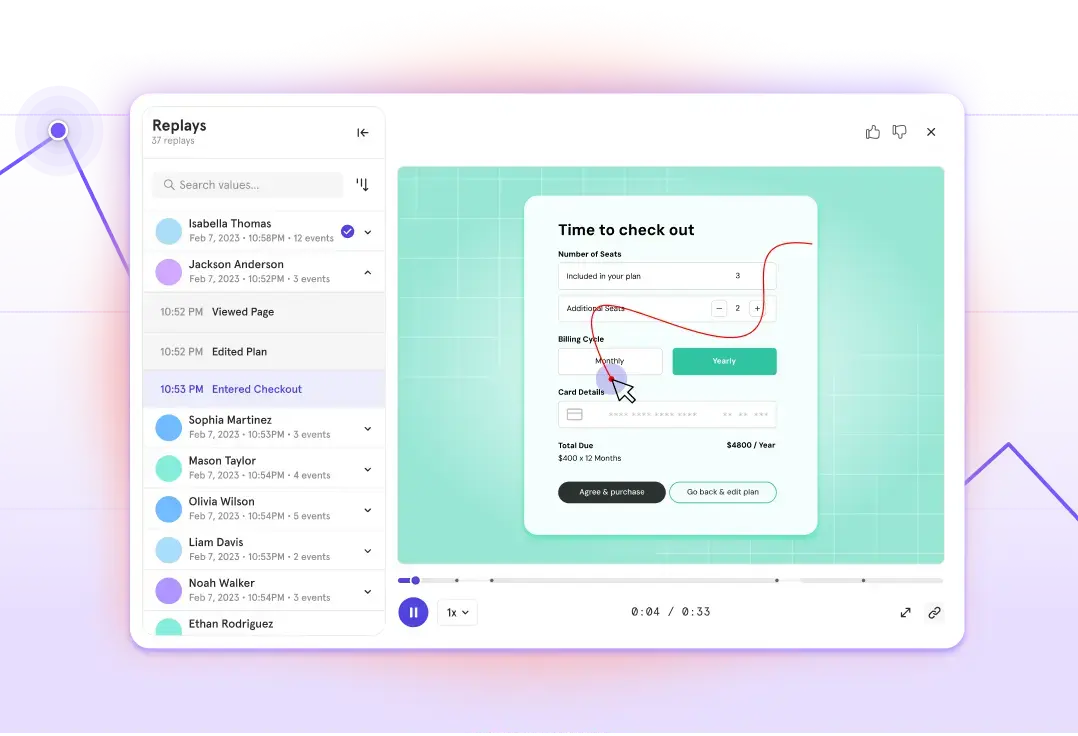

This methodology is also helpful because you can give feedback to the different teams that were part of the launch. For instance, product marketing’s job is to help the user “discover” the feature. When it comes to “use,” you start to get into UI/UX design: did the user start the task and successfully finish it? You can use Funnels in Mixpanel to see how far users get through creating a widget.
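As a rough illustration of how the three stages could be counted from raw product events, here is a minimal Python sketch. The event names `widget_opened` and `widget_saved` are hypothetical stand-ins for “clicked to open the widget” and “configured settings and hit save,” and “rely” is approximated as saving the widget at least twice. It is a simplified count that ignores event order and time windows, not how Mixpanel computes Funnels.

```python
from collections import Counter

# Hypothetical event names standing in for the checkpoints described above.
DISCOVER = "widget_opened"  # user clicked to open the new widget
USE = "widget_saved"        # user configured settings and hit save
# "Rely" is derived below: the user saved the widget more than once.


def discover_use_rely(events):
    """Count users at each stage from (user_id, event_name) pairs."""
    discovered = {user for user, name in events if name == DISCOVER}
    saves = Counter(user for user, name in events if name == USE)
    # Funnel-style: only count "use" for users who also discovered the feature.
    used = {user for user in saves if user in discovered}
    # Rely: users who created the widget and came back to do it again.
    relied = {user for user in used if saves[user] >= 2}
    return {"discover": len(discovered), "use": len(used), "rely": len(relied)}


def step_conversion(counts):
    """Share of users carried from each stage to the next."""
    use_rate = counts["use"] / counts["discover"] if counts["discover"] else 0.0
    rely_rate = counts["rely"] / counts["use"] if counts["use"] else 0.0
    return {"discover_to_use": use_rate, "use_to_rely": rely_rate}


# Toy events for illustration only.
events = [
    ("u1", "widget_opened"), ("u1", "widget_saved"), ("u1", "widget_saved"),
    ("u2", "widget_opened"), ("u2", "widget_saved"),
    ("u3", "widget_opened"),
    ("u4", "widget_opened"), ("u4", "widget_saved"), ("u4", "widget_saved"),
]
counts = discover_use_rely(events)
print(counts)  # {'discover': 4, 'use': 3, 'rely': 2}
print(step_conversion(counts))
```

Reading the two conversion rates side by side maps onto the feedback loop described here: a weak discover-to-use rate points at the creation flow, while a weak use-to-rely rate points back at the feature itself.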

And then with “rely,” you can go to your product and engineering team and say, “Hey, we know the UI team and the product marketing team got the feature into everybody’s hands and they used it, but then they never used it again. Should we change the feature? Did we miss the mark?”

Mixpanel: When has Mixpanel shown you a trend that stood out to you?

Digging into the data always teaches me something I didn’t know, and it’s never on the surface. I always see something and think, “Huh, that’s weird.”

For instance, we had some surprising data about our template usage. You would think that with a tool that generates dashboards, most new users would start with a template. We were surprised to find that most of our users did not use our templates, even though we tried to push them into our template flow. And it turned out that the people who did use templates were less likely to keep using the product and upgrade.

That upended conventional wisdom. I had assumed we should start users with a template that displays all the features and options. But when I looked into it in Mixpanel, the more engaged users were the ones who stumbled their way through the various features on a dashboard, sort of like letting a kid play with a pile of random LEGO blocks rather than handing them a finished LEGO set.

Now, instead of templates, we focus on a tutorial flow that gets people building with the LEGO pieces themselves. Without Mixpanel, we would absolutely have invested a bunch of time in building fancier templates and better filters for finding them, which would actually have made things worse.
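The comparison behind this finding, upgrade rates for users who started from a template versus those who didn’t, can be sketched as a simple cohort split. This is a minimal Python illustration, not AdStage’s actual analysis: the `User` record and its field names are hypothetical, and the data is toy data rather than AdStage’s numbers.

```python
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical per-user summary; field names are illustrative only.
    user_id: str
    used_template: bool
    upgraded: bool


def upgrade_rate_by_cohort(users):
    """Return the upgrade rate for template users and non-template users."""
    rates = {}
    for cohort in (True, False):
        members = [u for u in users if u.used_template == cohort]
        upgraded = sum(u.upgraded for u in members)  # True counts as 1
        label = "template" if cohort else "no_template"
        rates[label] = upgraded / len(members) if members else 0.0
    return rates


# Toy data for illustration only; these are not AdStage's numbers.
users = [
    User("a", used_template=True, upgraded=False),
    User("b", used_template=True, upgraded=True),
    User("c", used_template=False, upgraded=True),
    User("d", used_template=False, upgraded=True),
    User("e", used_template=False, upgraded=False),
]
print(upgrade_rate_by_cohort(users))
```

With this toy data, the template cohort upgrades at 0.5 and the non-template cohort at about 0.67, the same direction of gap Wicker describes.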

Mixpanel: How do you set product priorities?

Big picture: I start with the mission of the company. The mission of AdStage is to tell a marketer where the next dollar should be spent. We have a handful of product strategies we think will help marketers do that.

From there, I ask, “Where can we be the absolute best?” Those are the products and features we look to develop. Next, we try to disprove the idea; if we can’t, we sit down with engineering, sales, customer success, and marketing and plan it out. For example, I mentioned that AdStage users can show the actual ad on their AdStage dashboards. That came about because we saw how many marketers needed to see a visual of their ad in order to understand which ad creatives were working. Previously they were copying and pasting images of the ad into their dashboards and then adding metrics. Who wants to copy and paste all day!? We saw that we could automate that whole process, and we did!

Mixpanel: What metrics do customers use to measure AdStage’s success?

Workflow efficiency is one of the key reasons people buy a SaaS tool like ours. Marketers are likely using Excel or Google Sheets and doing a lot of manual tasks that they want to automate. So for our customers, time saved is one of the biggest metrics.

One of our products, AdStage Automate, runs rules on the ad networks, for example, “Pause this LinkedIn Sponsored Content if the CTR is bad.” To show customers how much time we save them, we count how many changes our automated tasks make on their behalf. We assume each change would take, say, four minutes by hand, so we can tell users, “This week you saved 160 hours because we made 2,000 budget changes and paused 400 campaigns.”
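For the 160-hour figure to follow from 2,400 automated changes (2,000 budget changes plus 400 paused campaigns), each change has to be worth four minutes: 2,400 × 4 = 9,600 minutes, or 160 hours. A minimal Python sketch of that calculation, assuming a single flat per-change estimate for every change type; the function and dictionary key names are hypothetical.

```python
# Assumption: one flat per-change estimate, chosen to match the example figures.
MINUTES_PER_CHANGE = 4


def hours_saved(change_counts, minutes_per_change=MINUTES_PER_CHANGE):
    """Convert counts of automated changes into estimated hours saved."""
    total_changes = sum(change_counts.values())
    return total_changes * minutes_per_change / 60


# Hypothetical weekly tally of changes made by automated rules.
weekly_changes = {"budget_changes": 2000, "campaigns_paused": 400}
print(hours_saved(weekly_changes))  # 160.0
```

Keeping the per-change estimate as a parameter makes it easy to assign different estimates to different change types later, for instance if pausing a campaign is judged quicker than adjusting a budget.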