Implementing AI at TymeX: Insights on innovation from Michael Jon Wissekerke

There’s no escaping the push to use AI to drive innovation, and being data-driven is more of a need than a want for any organization seeking to grow.

While most people understand why you would want to use AI and data to drive growth, the challenge is often how. We spoke to Michael Jon Wissekerke, Portfolio Product Manager, Growth, Engagement and Personalization at next-gen bank TymeX, to understand what drove their AI initiatives, how they’re approaching AI, and how they’re managing the risks associated with AI usage.

How did the AI journey at TymeX begin?

As we all know, you wake up and there’s a new headline about some shiny new thing in the AI space, and it’s hard to keep track of everything that’s going on. Credit to our leadership team: when OpenAI released ChatGPT in November 2022, they recognized it as a seminal moment.

At that point, we shifted our focus to becoming an AI-native and AI-first company. This involved putting together our generative AI capability and platform, an endeavor that has since led to us winning innovation awards in Asia.

From a culture perspective, we’ve focused primarily on how we get these tools to our people. It’s a tricky situation because it depends on who you’re working with. We’ve got people in operations, business, product and engineering; each of these personas requires different ways to get the tools and adopt them in the process.

One of the most interesting exercises we did was to run an off-site with our group leadership, and their mandate was to come up with a business case using only ChatGPT. They weren’t allowed to use any other tool. We had people of varying ages, some of whom had never used AI before, so it was quite an eye-opening moment for them.

With many ideas and directions, how do you prioritize what you want AI to solve?

We’re actually beginning to view ourselves more as a technology company than a bank, so speed of execution is an important factor. A lot of our focal points are on impact, time to value, and identifying inefficient processes. That led us to work on automation use cases to see how we can compress timelines as much as possible.

What are some of the use cases that you’re working on?

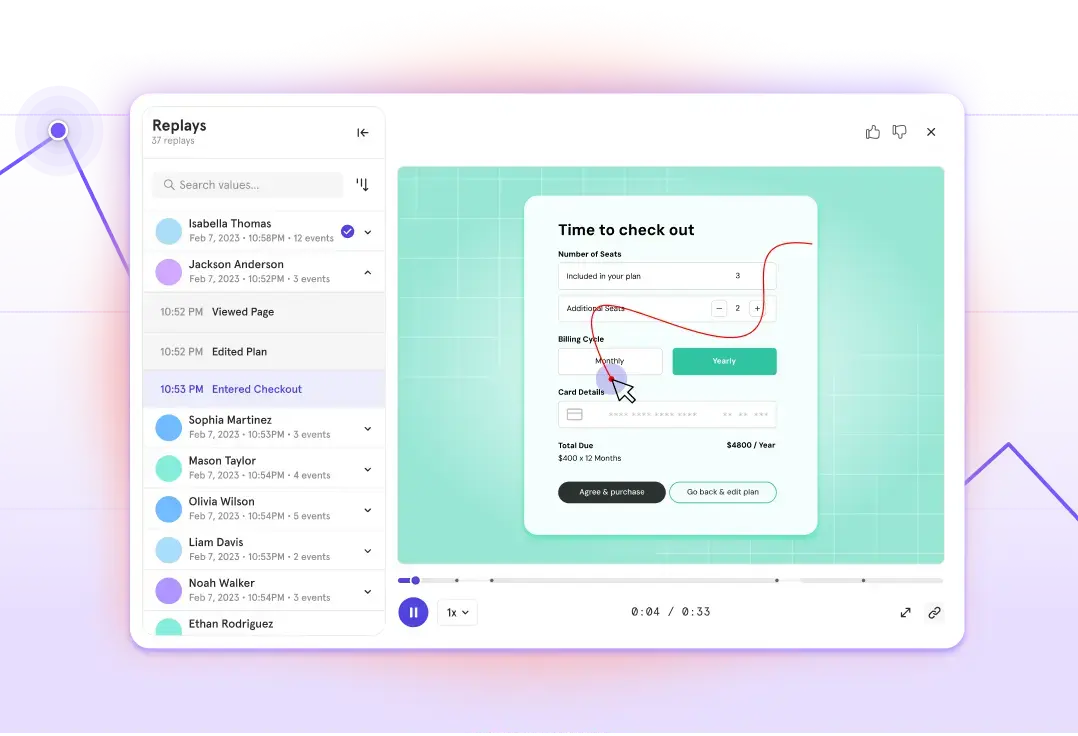

First, we’re focusing on customer service use cases. This involves transcribing calls, mining sentiment from them, and potentially analyzing social media sentiment to identify customer satisfaction trends. We then plan to integrate this information into a Mixpanel dashboard and correlate it with churn.
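The correlation step mentioned above can be sketched in a few lines. This is a minimal illustration, not TymeX's pipeline: the customer records, the sentiment scale of -1 to 1, and the `pearson` helper are all assumptions made for the example, and the data is invented.

```python
from math import sqrt

# Hypothetical per-customer records: average call sentiment in [-1, 1]
# and whether the customer later churned. Values are made up.
customers = [
    {"id": "c1", "sentiment": 0.8, "churned": False},
    {"id": "c2", "sentiment": 0.5, "churned": False},
    {"id": "c3", "sentiment": 0.1, "churned": False},
    {"id": "c4", "sentiment": -0.3, "churned": True},
    {"id": "c5", "sentiment": -0.6, "churned": True},
    {"id": "c6", "sentiment": -0.9, "churned": True},
]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


sentiments = [c["sentiment"] for c in customers]
churn_flags = [1.0 if c["churned"] else 0.0 for c in customers]

# A negative value means lower sentiment goes together with more churn.
r = pearson(sentiments, churn_flags)
print(f"sentiment vs. churn correlation: {r:.2f}")
```

A single coefficient like this is only a starting point; in practice the same per-customer scores would be sent to the analytics dashboard so the relationship can be tracked over time.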

Another use case is around instrumenting data flows. We use Segment as our customer data platform, and instrumenting and getting those JSON payloads can be quite cumbersome sometimes. So we built a tool that allows us to hook into Figma to potentially upload screens, and from that, we generate the payloads that are required for each of those specific elements. For example, if the screen shows a button, the tool is able to detect what the JSON payload for the button’s tap event is, or in other cases, it’s able to help classify what’s part of the flow or not.
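To make the idea concrete, here is a rough sketch of turning detected screen elements into Segment-style `track` payloads. It is not the tool described above: the `ScreenElement` descriptor, the `INTERACTIONS` mapping, and the event naming are hypothetical, and the step that detects elements from a design file is assumed to have already happened. Only the general shape of a `track` call (`type`, `userId`, `event`, `properties`) follows Segment's published spec.

```python
import json
from dataclasses import dataclass


@dataclass
class ScreenElement:
    """Hypothetical descriptor for a UI element detected on a design screen."""
    screen: str
    name: str
    kind: str  # e.g. "button", "input", "image"


# Assumed mapping from element kind to the interaction it emits.
# Kinds that are missing here are treated as not part of the flow.
INTERACTIONS = {"button": "Tapped", "input": "Edited"}


def track_payload(element, user_id):
    """Build a Segment-style track payload, or None for non-interactive elements."""
    action = INTERACTIONS.get(element.kind)
    if action is None:
        return None
    return {
        "type": "track",
        "userId": user_id,
        "event": f"{element.name} {action}",
        "properties": {"screen": element.screen, "element_type": element.kind},
    }


elements = [
    ScreenElement("Onboarding", "Continue Button", "button"),
    ScreenElement("Onboarding", "Hero Image", "image"),
]

# Keep only the elements that produce an event.
payloads = [p for e in elements if (p := track_payload(e, "user-123")) is not None]
print(json.dumps(payloads, indent=2))
```

Here the button yields a `Continue Button Tapped` event, while the image is classified as outside the flow and produces no payload.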

Using this, we’re able to map out our user journey. We’re still testing this out, but it’s a great use case that I’m really, really excited about.

Are you looking into any personalization use cases?

It’s definitely something we’re working on. I’ve just finished our product vision for our personalization portfolio, and as part of that exercise, I was trying to grapple with the idea of how to build something with AI or an LLM at the heart of your product, as opposed to taking a product and just putting an LLM on top of it.

Personalization is a natural fit for that. For me, that means being as explainable and transparent to our users as possible. That’s probably one of the things that sometimes gets missed as we build systems that are black boxes to a certain extent.

Take fraud alerts, for example. As the product team, we need to understand the lineage of the decision-making that determines whether there is fraud, so that we can debug the systems. But we should also be able to surface to the user why that decision was made.
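One way to picture those two audiences is a decision object that carries both an internal lineage and user-facing reasons. The sketch below is purely illustrative: the rules, thresholds, field names, and wording are hypothetical, and a real fraud system would be far more involved.

```python
from dataclasses import dataclass, field


@dataclass
class FraudDecision:
    flagged: bool
    lineage: list = field(default_factory=list)       # internal: every rule evaluated
    user_reasons: list = field(default_factory=list)  # external: plain-language reasons


# Hypothetical rules: (rule id, predicate, explanation shown to the user).
RULES = [
    ("amount_over_limit", lambda t: t["amount"] > 10_000,
     "The amount is unusually large for your account."),
    ("new_device", lambda t: t["new_device"],
     "The payment came from a device we have not seen before."),
]


def evaluate(txn):
    """Run every rule, recording lineage for debugging and reasons for the user."""
    decision = FraudDecision(flagged=False)
    for rule_id, predicate, explanation in RULES:
        fired = bool(predicate(txn))
        decision.lineage.append({"rule": rule_id, "fired": fired})
        if fired:
            decision.flagged = True
            decision.user_reasons.append(explanation)
    return decision


decision = evaluate({"amount": 15_000, "new_device": False})
print(decision.flagged, decision.user_reasons)
```

The product team debugs from `lineage`, which records rules that did not fire as well as those that did, while the user only sees the entries in `user_reasons`.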

What are the risks in leveraging AI tools?

These systems have the same issues as any other. So the risks for us as a regulated fintech are always going to be PII-type data that’s fed into these systems and the leaking of that information.

For us at TymeX, at our generative platform layer, we’re fine-tuning open source models so that we have control over that data and it stays secure within our environment. That’s what we’re doing to manage that risk.

We also think about how we check things and ensure quality when using AI tools. To accomplish that, we’ve got our own main generative capability, but we also have third-party LLMs to check the code and output quality. The biggest risk for us is how we evaluate these systems and their output. We need quality output, but we’re new to all of this and we don’t know what we don’t know.

With one of our use cases, the LLM hallucinated a key data piece before it was meant to be sent. The point I’d like to highlight is that you’re not getting away from “human-in-the-loop” sort of models even with AI. You need people to check the final output and make sure it’s fit for the purpose. From a risk perspective, you’ve got to make sure a human performs the final check before exposing the output to internal and external stakeholders.
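A human-in-the-loop check like this can be enforced in code by refusing to release anything that has not been explicitly approved. The following is a minimal sketch under assumed names (`Draft`, `review`, `publish`); it does not describe how TymeX implements its review step.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    """LLM-generated output that is waiting for a human decision."""
    content: str
    status: Status = Status.PENDING
    reviewer: str = ""


def review(draft, reviewer, approve):
    """Record a human reviewer's decision on the draft."""
    draft.reviewer = reviewer
    draft.status = Status.APPROVED if approve else Status.REJECTED
    return draft


def publish(draft):
    """Release the content only if a human has approved it."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("output has not been approved by a human reviewer")
    return draft.content


draft = Draft("Generated customer summary")
review(draft, reviewer="analyst@example.com", approve=True)
print(publish(draft))
```

Because `publish` raises on pending or rejected drafts, skipping the review is an error rather than a silent default.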

Where do you think things are going in the next 12 months?

For us, we’re conceptually thinking about how AI will change things, and we see a world where people will be using LLMs and AI as the main interface. This could mean a collapse of the channels over time. For example, you can see search engine traffic is declining year over year and some of it’s going to AI. No one really knows where everything is going, but that’s one of the scenarios we’re planning for, and I’d recommend others do the same.