What is A/B testing?

A/B testing, or "split-testing," is a method of testing variations of web pages, app interfaces, ads, or emails. Designers or marketers start with a goal, such as earning more sign-ups, and show two versions to similar audiences. If there’s a clear winner, they adopt that version. As you will see, A/B testing is critical for designing products people love.

What are the benefits of A/B testing?

A/B testing injects a healthy dose of reality into your products. It’s all too easy for product designers, developers, and marketers to fall in love with their own creations, and A/B testing shows them what users really think. For example, when designing a website, how can you determine the optimal location for each button? Or which graphics maximize sign-ups? Or which version of your navigation bar users prefer? A/B testing answers these questions with evidence rather than opinion. Here are some benefits of A/B testing your digital product:

- Optimize performance

- Reveal hidden trends and user behaviors

- Incorporate user feedback

Testing takes you right to the heart of users’ desires for your product. More often than not, you and your users share a goal: you want to help them solve a problem, enjoy an experience, make a purchase, or get help. By testing and optimizing each stage of their journey, you can speed up their progress so that you both get more of what you want. Plus, A/B testing is non-invasive and doesn’t disrupt their experience because most of it occurs without their active knowledge. After repeated tests, the product slowly evolves into something that’s intuitive, useful, and loved by the people who use it.

What can you A/B test?

With the right A/B testing tools, you can test almost every element of your site, app, or message. Here are a few common examples of A/B testing goals for several verticals:

- Ecommerce site: Increase purchases

- Consumer app: Increase time spent in-app

- Online publication: Increase sign-up conversion rates

- Business app: Increase revenue-per-user

- Advertising: Increase clicks and purchases

- Email marketing: Increase opens and click-through rates

And here are a few common site or app elements teams can test to achieve these goals:

- Layout

- Navigation

- Buttons

- Text

- CTA

- Social proof

- Pop-ups

- Announcement bars

- Widgets

- Badges

- Images

- Videos

- Links

Teams can test each of these elements for size, placement, appearance, and even the timing or circumstance under which they appear.

To learn more, download Mixpanel’s Benchmarks Report.

How to perform an A/B test

To anyone who sat through a grade school science class, the A/B testing process will sound familiar: it mirrors the scientific method. Testers develop a hypothesis for how to improve performance and create variants of the text, imagery, videos, or timing. Then they run, analyze, and repeat, implementing results if they’re meaningful.

Step 1: Define your goal

What’s your end goal? Are you interested in conversions, purchases, or usage? Whichever it is, measure your metrics before testing so you have a baseline.

Step 2: Study your existing data

If you’re analyzing a website, do you have high bounce rates? If an app, do you have low usage rates? If email, low click-through rates? Examine your customer journey and find where users drop off most often within the conversion funnel. If you find clear leaks before places like forms or CTAs, start your testing there. Consider incorporating data from:

- Product analytics

- Heat maps

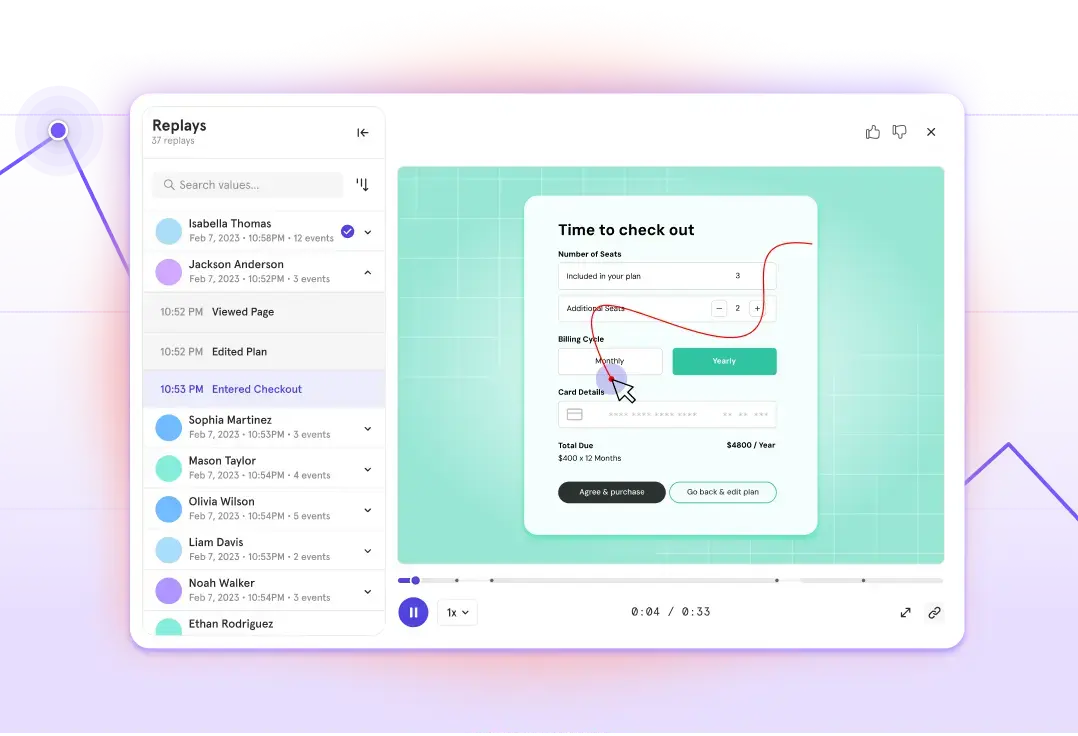

- Visitor recordings

- Form analysis

- Embedded surveys
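To make the idea of finding leaks in the funnel concrete, here is a minimal sketch in Python. The step names and counts are hypothetical, and in practice the numbers would come from your analytics tool rather than a hard-coded list.

```python
def funnel_drop_offs(steps):
    """Return (from_step, to_step, drop_off_rate) for each adjacent pair of steps.

    `steps` is an ordered list of (step_name, user_count) tuples.
    """
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        # Share of users who reached step A but did not reach step B.
        rate = 1 - count_b / count_a if count_a else 0.0
        results.append((name_a, name_b, rate))
    return results


def biggest_leak(steps):
    """Return the transition with the highest drop-off rate."""
    return max(funnel_drop_offs(steps), key=lambda r: r[2])


# Hypothetical funnel counts, for illustration only.
funnel = [
    ("landing_page", 10000),
    ("pricing_page", 4000),
    ("signup_form", 3000),
    ("signup_complete", 600),
]

for step_from, step_to, rate in funnel_drop_offs(funnel):
    print(f"{step_from} -> {step_to}: {rate:.0%} drop-off")

leak_from, leak_to, leak_rate = biggest_leak(funnel)
print(f"Biggest leak: {leak_from} -> {leak_to}")
```

With these made-up numbers, the largest drop-off sits between the sign-up form and its completion, which would make the form a reasonable first candidate for testing.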

Step 3: Hypothesize

Before beginning, are there any learnings you can draw upon from past tests? Are there certain features, colors, or placements that users have reacted favorably to before? Use this data to draft a hypothesis for what will improve results. For example, “Making the CTA button more prominent will drive 20% more clicks.” Now, create a variant that reflects your hypothesis. This can be as simple as changing text or as complicated as building a new form, page, or graphic. In the example above, we would build a second, more prominent CTA button. Remember, never test more than one variable at a time. If you commit the error of testing two things at once, say, by changing the color and the position of the CTA, you’ll never know which of the two changes influenced your results.
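Once the variant exists, each user has to be shown either the control or the variant, and should keep seeing the same one. Many testing tools handle this for you. As a rough sketch of one common approach, deterministic hash-based bucketing, the Python below assigns users by hashing their ID together with the experiment name. The function name, the experiment name `cta_prominence`, and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "variant")):
    """Deterministically assign a user to one of the variants.

    Hashing the experiment name with the user ID means the same user
    always lands in the same group for a given experiment, while
    different experiments split users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical usage: the assignment is stable across calls.
print(assign_variant("user-42", "cta_prominence"))
print(assign_variant("user-42", "cta_prominence"))  # same result as above
```

Because the split depends only on the hash, no assignment table needs to be stored, and the two groups end up close to equal in size for large audiences.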

Step 4: Test and analyze

If using a customer analytics platform, upload your variant and run a test. Two big factors determine whether the results can be trusted:

- Sample size: You need a large enough audience that the results are reliable. According to HubSpot, the company’s minimum number for testing emails is 1,000. For websites and apps, it can be much higher.

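- Statistical significance: If the difference in performance was slight, and it often is, how do you know that it’s not simply due to random chance in who happened to see each version? You test for statistical significance, typically by computing a p-value and comparing it to a threshold chosen in advance, such as 0.05.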
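How large is "large enough" depends on your baseline rate and on how small a change you want to detect. The sketch below uses a standard textbook approximation for comparing two conversion rates. The function name, the 5% baseline, and the defaults of a 5% significance level and 80% power are assumptions chosen for illustration, not recommendations from any particular tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group to detect a relative lift.

    Uses the normal approximation for a two-sided test of two proportions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be between 0 and 1 and must differ")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical example: 5% baseline conversion, hoping for a 20% relative lift.
print(sample_size_per_group(0.05, 0.20))
```

Under these assumptions the estimate comes out at a little over 8,000 users per group. Smaller expected lifts push the number up quickly, which is why slight differences need large audiences.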

Based on the results and the statistical significance, can you declare a clear winner? If so, implement that change. If not, you can’t reject the “null hypothesis,” the assumption that the variant performs no differently from the control. In that case the control wins, and you don’t implement the change.
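For conversion-style metrics, one common way to check significance is a two-proportion z-test. The following is a minimal sketch using only Python’s standard library. The function name and the conversion counts are hypothetical, and your analytics platform may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). A small p-value suggests the observed
    difference is unlikely to be due to chance alone.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control converts 500 of 10,000, variant 600 of 10,000.
z, p_value = two_proportion_z_test(500, 10000, 600, 10000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Clear winner" if p_value < 0.05 else "Keep the control")
```

In this made-up example the p-value falls well below 0.05, so the variant would be declared the winner. Had the variant converted 520 users instead of 600, the same test would not reach significance and the control would stay.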

Step 5: Repeat

The science cycle never ends. Implement any learnings and continue to test. Each time, return to your conversion funnel and use A/B testing to identify and remove blockages to users’ progress. In doing so, you’ll increase the utility of your site or app.

Advanced testing techniques

A/B testing against large populations with simple tests is great, but to hone your product faster with better data, you can deploy:

- Segmented tests provide more actionable data. Tests performed on too broad a population may conceal significant differences for certain groups. For example, if the average user prefers a 14-point font, this might conceal the fact that users under 10 or over 65 years old find it unreadable. To appeal to them, A/B test with age-based segments and add controls to your product so they can adjust the font size to their liking.

- Multivariate tests change several elements at once and compare the resulting combinations. A related approach, often called A/B/n testing, pits multiple variants against a single control: instead of an A versus a B, there’s an A versus a B, a C, and so on. Both naturally require much larger sample sizes and product analytics software capable of properly measuring them.
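To illustrate how an overall result can hide a segment that behaves differently, here is a small Python sketch that breaks conversion rates down by segment. The segment labels and all counts are invented for the example.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Compute conversion rates per (segment, variant), plus an "all" rollup.

    `records` is an iterable of (segment, variant, users, conversions).
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [users, conversions]
    for segment, variant, users, conversions in records:
        for key in ((segment, variant), ("all", variant)):
            totals[key][0] += users
            totals[key][1] += conversions
    return {key: conv / users for key, (users, conv) in totals.items() if users}


# Hypothetical results for a font-size test, split by age group.
records = [
    ("18-64", "A", 9000, 450),
    ("18-64", "B", 9000, 540),
    ("65+", "A", 1000, 60),
    ("65+", "B", 1000, 30),
]

rates = conversion_by_segment(records)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:>5} / {variant}: {rate:.1%}")
```

In this invented data, version B wins overall but loses among users aged 65 and over. Keep in mind that each segment is smaller than the full audience, so each one needs its own check for sample size and statistical significance.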

Take the time now to inventory your product marketing tools. Do they permit you to run A/B tests? If so, and there are places in your conversion funnel where users find friction, A/B test away.