A close look at our analytics and metrics for product-led growth

In another blog, we provided a look at how we implemented a product-led growth (PLG) strategy at Mixpanel from a data and analytics perspective. There, we described our PLG setup at a high level and then walked through our architecture in detail. To quickly summarize, we collect marketing, sales, and product data from the relevant systems of record, merge them together in BigQuery using Salesforce account IDs as a common identifier and tag each account with whether it belongs to our ideal customer profile (ICP). In this blog, I’ll pick up where we left off by walking through what we do with all of this joined data in BigQuery.

We want all of our teams to operate on the single source of truth we’ve collected in our data warehouse. In particular, for our PLG strategy to work, every team must be able to use self-serve analysis to:

- See how their work is affecting paid conversion

- Quickly and efficiently spot problems and opportunities in conversion

To make that happen, we wanted all of our activity data related to marketing, sales, product usage, and paid conversion in a single Mixpanel project. Here’s how we did it.

Data structure for our Mixpanel project

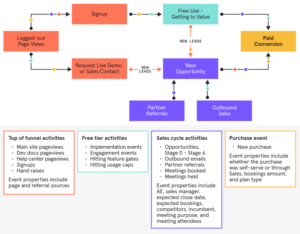

In our PLG implementation post, we showed this diagram for the paths new users can take to become paid customers.

In order to answer questions about how we are doing on converting new users to paid customers, we first needed to map out the important events associated with each block in that diagram. We broke it down like this:

In reality, we have many more event properties than are listed here, not to mention events covering paid account adoption, upgrades, downgrades, and churn, but this should give you a taste of how we structured the events for Mixpanel analysis.

Besides events, you can also sync dimensions to Mixpanel in the form of user profiles and group profiles. To keep things simple in the beginning, we made the unusual decision to use Salesforce account IDs as our “user IDs” in Mixpanel. We did that because all of our events in the diagram above have Salesforce account IDs, whereas some events like sales opportunity stage changes don’t have a single user associated with them, and most of the questions we wanted to answer in Mixpanel related to PLG were at the account level. We have a list of account properties that we track, including:

- The current ARR the account is paying

- Various measures of account health and adoption

- Whether the account is part of our ICP

- The sales team the account is assigned to

- Whether the account has a Customer Success Manager assigned to it

- What region the account is based in

Over time, though, we have found that there is more demand for doing analysis at both the individual user level and the account level, so we are planning a switch to using user IDs as the primary key (called a distinct ID in Mixpanel), and having Salesforce accounts, Mixpanel projects, and Mixpanel organizations as group profiles. It turns out that you don’t actually have to have a distinct ID on every event. So long as every event has a key for at least one of the dimensions above, we will be able to do both individual user and account level analysis wherever needed.
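To make the "at least one key" rule concrete, here is a minimal Python sketch of a check that could run before a sync. The property names (`distinct_id`, `salesforce_account_id`, `project_id`, `organization_id`) and the sample events are hypothetical, chosen only for illustration. The real group keys are whatever the Mixpanel project defines.

```python
from typing import Any, List, Mapping

# Hypothetical property names: a user-level distinct ID plus one key per
# group type (Salesforce account, Mixpanel project, Mixpanel organization).
IDENTITY_KEYS = (
    "distinct_id",
    "salesforce_account_id",
    "project_id",
    "organization_id",
)


def identity_keys_present(properties: Mapping[str, Any]) -> List[str]:
    """Return the identity keys that carry a non-empty value on an event."""
    return [key for key in IDENTITY_KEYS if properties.get(key) not in (None, "")]


def is_attributable(properties: Mapping[str, Any]) -> bool:
    """An event is usable if at least one identity key is present."""
    return len(identity_keys_present(properties)) > 0


# Made-up sample events for illustration only.
sample_events = [
    {"event": "Signup",
     "properties": {"distinct_id": "user-1", "salesforce_account_id": "001A"}},
    {"event": "Opportunity Stage Changed",
     "properties": {"salesforce_account_id": "001A"}},
    {"event": "Orphaned Event",
     "properties": {"stage": "demo"}},
]

unattributable = [
    e["event"] for e in sample_events if not is_attributable(e["properties"])
]
print(unattributable)
```

In this sketch, the opportunity stage change has no user attached but still passes because it carries an account key. Only the event with no identity key at all is flagged.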

Syncing events and profiles to Mixpanel

Next, we needed to transform our data into tables that have the events, profiles, and properties we mapped out. Then we needed to get the transformed data from BigQuery to Mixpanel (AKA reverse ETL). In the early days of building out our Mixpanel project, we used Census for both the transformation piece and the reverse ETL piece. Census is great for this because you can quickly create transformation models with just SQL and then set up a sync to push the transformed data into Mixpanel. This allowed us to iterate on the data in a test project until we got things how we wanted them.
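As a rough illustration of what the transformation step has to produce, the Python sketch below turns one warehouse row into an event in the shape Mixpanel ingests: an event name plus a properties object carrying `distinct_id`, `time`, and `$insert_id`. In practice the SQL models and the Census sync handle this mapping. The column names (`event_name`, `occurred_at`, `salesforce_account_id`, `region`) and the hashing scheme for `$insert_id` are assumptions made for the example, not our actual schema.

```python
import hashlib
from datetime import datetime, timezone
from typing import Any, Dict

# Hypothetical column names for a transformed event table.
RESERVED_COLUMNS = ("event_name", "occurred_at", "salesforce_account_id")


def row_to_event(row: Dict[str, Any]) -> Dict[str, Any]:
    """Shape one warehouse row as an event, using the account ID as distinct ID."""
    occurred_at = row["occurred_at"]
    # A deterministic ID, so that re-sending the same row can be deduplicated.
    raw_id = "|".join(
        [row["event_name"], row["salesforce_account_id"], occurred_at.isoformat()]
    )
    insert_id = hashlib.sha1(raw_id.encode("utf-8")).hexdigest()[:32]

    # Every non-reserved column becomes an event property.
    properties = {k: v for k, v in row.items() if k not in RESERVED_COLUMNS}
    properties.update(
        {
            "distinct_id": row["salesforce_account_id"],
            "time": int(occurred_at.timestamp()),  # seconds since the Unix epoch
            "$insert_id": insert_id,
        }
    )
    return {"event": row["event_name"], "properties": properties}


# Made-up row for illustration only.
example_row = {
    "event_name": "Signup",
    "occurred_at": datetime(2023, 1, 15, 12, 0, tzinfo=timezone.utc),
    "salesforce_account_id": "001A",
    "region": "EMEA",
}
example_event = row_to_event(example_row)
print(example_event["event"], example_event["properties"]["time"])
```

Deriving `$insert_id` from the row contents is one possible choice. It keeps repeated syncs of an unchanged row from producing different identifiers.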

After we had account profiles and enough of the events in Mixpanel to do some useful analysis, we switched over to putting the event and profile transformations in dbt and used Census for the syncs. We still make use of Census models when prototyping new data or syncing lookup tables into Mixpanel, though.

One benefit of Mixpanel’s data model is that profile properties can be enriched as often as needed with new properties and they will automatically be available for the entire event history. A problem we encountered early on, though, was what to do if we wanted to update the events we have in Mixpanel retroactively. For example, we often think of some new event property that we want to add to an event, and then we’d like to have it for the full history of events in Mixpanel. To accomplish this, we added a special version event property to all events. Whenever we update an event, we increment the version property and then resync the full event table with Census. On the Mixpanel side, we have a Data View that filters all of our events so that only the highest version for any particular event is visible.
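The filtering logic behind that Data View can be sketched in a few lines of Python. This is not how a Data View is configured in Mixpanel. It only illustrates the rule "keep the highest version of each event", and the property name `version`, the `signup_source` property, and the sample rows are assumptions for the example.

```python
from typing import Any, Dict, List

Event = Dict[str, Any]


def latest_version_only(events: List[Event]) -> List[Event]:
    """Keep only rows whose version equals the highest version seen for that event name."""
    highest: Dict[str, int] = {}
    for row in events:
        name = row["event"]
        version = row["properties"]["version"]
        highest[name] = max(version, highest.get(name, version))
    return [
        row for row in events
        if row["properties"]["version"] == highest[row["event"]]
    ]


# Made-up rows: "Signup" was resynced as version 2 with a new property,
# while "Purchase" has only ever been synced as version 1.
synced = [
    {"event": "Signup", "properties": {"version": 1, "account": "001A"}},
    {"event": "Signup",
     "properties": {"version": 2, "account": "001A", "signup_source": "organic"}},
    {"event": "Purchase", "properties": {"version": 1, "account": "001A"}},
]

visible = latest_version_only(synced)
print([(e["event"], e["properties"]["version"]) for e in visible])
```

Because the version is tracked per event name, bumping one event does not hide other events that are still on an older version.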

When we got this all working, the end result was rather amazing. We found that the combination of quick prototyping, version control on event and profile definitions, and easy syncing from BigQuery to Mixpanel that came from our dbt+Census+Mixpanel workflow was extremely powerful. We could edit or enrich our data on the fly and still have all the power of event-based analysis. And it turns out that although Mixpanel is built with product analytics in mind, it also works really well out of the box for PLG analysis.

At this point you may be wondering why we bother syncing data to Mixpanel at all. Since we can transform the data as needed with dbt, why not use a BI tool like Looker or Tableau, or one of the newer tools that offer a self-serve analytics UI and query your data warehouse directly? There are two reasons.

- Unlike BI tools, Mixpanel offers our internal users a self-serve UI that lets them do pretty complex analysis without needing help from the analytics team.

- SQL is inherently slow and inefficient for many common types of ad-hoc analysis, whereas Mixpanel has a custom query engine designed to query event data efficiently and produce funnels and other analyses quickly. That speed is really important for exploratory analysis, where waiting a minute or more for each answer causes you to lose your train of thought.

Next, let’s look at some examples of the types of PLG analysis we can do in our Mixpanel project.

Analyzing our PLG data in Mixpanel

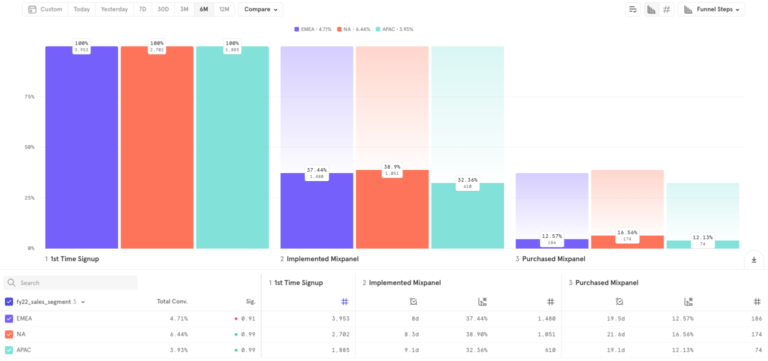

One of our core values at Mixpanel is to #BeOpen, so we’re going to go ahead and show some examples of analysis on our real internal data. First up is a new account funnel going from a signup to implementation (that is, when the account starts sending data to their Mixpanel project) to eventually purchasing a paid Mixpanel plan. We can break this funnel down by region.

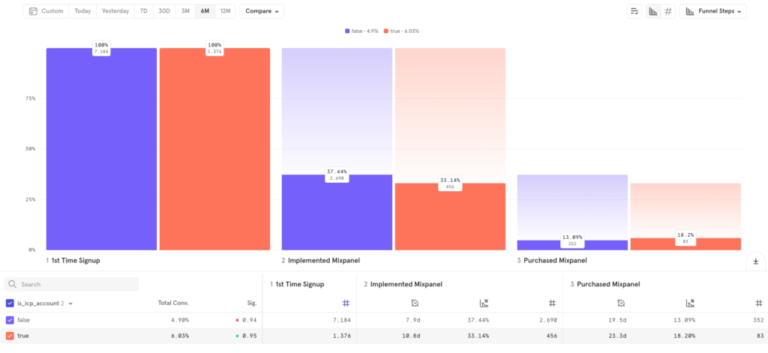

Here we see that North America converts best, followed by EMEA, then APAC. Next, we can break this same funnel down by whether or not the account is on our ICP list. This is important because we are focusing our go-to-market teams on winning ICP accounts. We know from previous research that ICP accounts are much more likely to retain, but are they more likely to purchase in the first place?

The answer is yes, they are. ICP accounts purchase within 90 days 6% of the time, while non-ICP accounts purchase within 90 days 4.9% of the time. Interestingly, though, non-ICP accounts implement at a higher rate. This finding represents an opportunity for us to improve conversion. We should dig into where our ICP accounts are having trouble getting started with implementing Mixpanel so that we can drive more paid conversions of these valuable accounts.
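For readers who want to see what a segmented funnel like the ones above computes, here is a minimal Python sketch. It is a simplified stand-in, not Mixpanel's query engine. The event names, the segment labels, and the sample accounts are made up. The 90-day window matches the one used in this analysis and is measured from the first step.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Tuple

STEPS = ("Signup", "Implementation", "Purchase")  # hypothetical event names
WINDOW = timedelta(days=90)  # conversion window, measured from the first step

AccountEvents = List[Tuple[str, datetime]]


def steps_completed(account_events: AccountEvents) -> int:
    """Count how many funnel steps an account completed, in order, within WINDOW."""
    reached = 0
    start = None
    for name, ts in sorted(account_events, key=lambda pair: pair[1]):
        if reached == len(STEPS):
            break
        if name != STEPS[reached]:
            continue  # not the step we are waiting for
        if reached == 0:
            start = ts
        elif ts - start > WINDOW:
            break  # events are time-sorted, so later ones are too late as well
        reached += 1
    return reached


def funnel_by_segment(
    accounts: Iterable[Tuple[str, AccountEvents]]
) -> Dict[str, List[int]]:
    """Return {segment: [accounts reaching step 1, step 2, step 3]}."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0] * len(STEPS))
    for segment, account_events in accounts:
        for step_index in range(steps_completed(account_events)):
            counts[segment][step_index] += 1
    return dict(counts)


def d(month: int, day: int) -> datetime:
    return datetime(2023, month, day)


# Made-up accounts for illustration only.
accounts = [
    ("ICP", [("Signup", d(1, 1)), ("Implementation", d(1, 5)), ("Purchase", d(2, 1))]),
    ("ICP", [("Signup", d(1, 1)), ("Implementation", d(1, 10))]),
    ("non-ICP", [("Signup", d(1, 1)), ("Purchase", d(6, 1))]),  # skipped a step
    ("non-ICP", [("Signup", d(1, 1)), ("Implementation", d(1, 2)),
                 ("Purchase", d(5, 1))]),  # purchase falls outside the window
]

funnel = funnel_by_segment(accounts)
overall_conversion = {seg: c[-1] / c[0] for seg, c in funnel.items()}
print(funnel)
print(overall_conversion)
```

Swapping the segment label from the ICP flag to region gives the regional breakdown. The funnel logic itself does not change.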

Ok, so that was some good news on paid conversion of ICP accounts. But how are we doing on driving the top of funnel? Overall, our signups are up ~50% YoY. But what really matters is whether we’re getting more fresh signups from ICP accounts. Below is the percentage of 1st time account signups that are ICP.

Here we see a dramatic improvement from November of last year to now, going from ~10% of 1st time account signups being ICP to ~17% in the last few months. This improvement happened right around the time that we standardized on our new ICP and our go-to-market teams started focusing on them. So, our focus has paid off. It’s working!

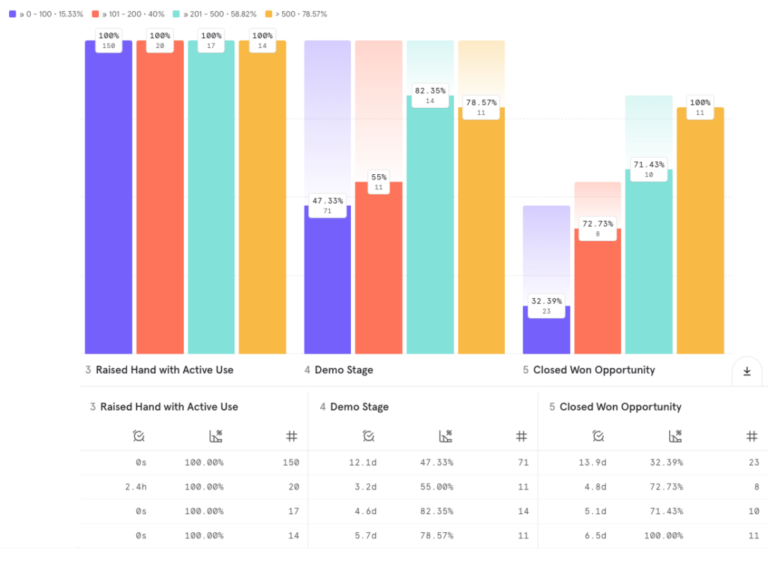

Now, we also want to understand how different levels of product usage influence the sales process. Below is a funnel that begins with new accounts that request sales contact, then get a demo (sales opportunity), and then decide to purchase. First, we break this down by the number of weekly active users in the account’s Mixpanel project at the time the sales opportunity is created.

Here we can see that conversion increases at each stage with increasing numbers of active users. So, it seems like a good goal for our product and go-to-market teams to try to get as many active users as possible in our new free-tier accounts. That’s a good ideal, but what we’d really like is a concrete threshold for our teams to aim for. Let’s break the same funnel down by report views and see if that points us towards a good goal.

Here, we see some evidence (at the demo stage, at least) that we hit a point of diminishing returns on sales conversion once an account exceeds 200 report views at the time the opportunity is created. So, a good goal is to get as many of our free-tier accounts as possible to 200 report views by the time they request sales contact. And, in fact, this is the threshold we actually use, based on analysis that we did in our internal Mixpanel project.

Hopefully, these examples bring to life just how useful product analytics approaches can be for PLG use cases.