DISCO uses Mixpanel Session Replay to turn What into Why for faster, better UX decisions

Company

DISCO is a B2B music management platform and trusted ecosystem where the largest labels and publishers, sync reps, music supervisors, rights holders, artists and brands organize, search, and securely share massive music catalogs.

The challenge

DISCO’s product team faced a familiar dilemma: their dashboards showed what users were doing, but not why.

User interviews provided rich context, but were slow to schedule across AU–US time zones and naturally biased toward successful workflows. Meanwhile, quantitative data revealed puzzling patterns—such as sudden bursts of ultra-short music streams after searches—without explaining the behavior behind them. Post-release monitoring lacked a scalable way to validate onboarding flows and catch UX friction before it compounded into support tickets or churn.

Why Mixpanel Session Replay

Zero integration friction: Session Replay lives inside the Mixpanel environment DISCO already uses, so the team can filter by existing segments (plan type, catalog size, role) and jump directly into user sessions—no new tools to learn or wire up.

From chart to context in one click: Any spike, drop, or anomaly in a Mixpanel chart becomes a doorway into real user behavior. The team pivots from numbers to video evidence instantly.

Governance built in: Sampling controls and event-based “force record” options keep replays focused on high-value moments (checkout flows, feature launches, billing changes) without exhausting storage quota or overstepping privacy boundaries.

Solution

Uncovering hidden user workflows

The Search & Discovery team noticed something odd: users would search for music, then stream multiple tracks for just a few seconds each. The metrics showed the pattern, but not the reason.

Session Replay revealed that music supervisors weren’t listening to full songs. Instead, they were scrubbing directly to choruses and verses using waveforms, triggering rapid-fire short plays.

The fix: DISCO embedded waveforms directly into search result rows, eliminating an entire click and dramatically reducing friction. Supervisors could now audition tracks instantly, without disrupting their flow.

“The pattern in the numbers made sense only after we watched sessions. Once we saw the scrubbing behavior, the UI change was obvious—and the workflow became dramatically simpler.”

Johannes Kresling, Senior Product Manager, DISCO

Catching conversion blockers before they spread

During an early replay review, Johannes watched a user click “Subscribe”—then nothing. The billing form was below the fold on smaller screens, invisible to users trying to complete payment. One session exposed a silent conversion killer that metrics alone would never have flagged.

The team repositioned the credit card fields, restoring visibility and preventing drop-offs across the checkout funnel.

“It was one of the first replays I ever watched, and it immediately showed a user unable to proceed because the billing form was hidden. That one session made the case for adopting Session Replay”, Kresling says.

Making new releases safer

For every beta launch, DISCO now forces replay capture on key events for 1–4 weeks. This records first-time user interactions in full and confirms that onboarding cues, entry points, and feature discovery work as intended before a broad rollout.

When error rates spiked on a new “find similar tracks by URL” feature, replays showed users simply retrying and succeeding moments later. It was an intermittent glitch, not a systemic failure—letting engineering prioritize the fix appropriately instead of treating it as an emergency.

Setup tips from DISCO

- Right-size sampling: Run ambient replay at ~10% to protect quota; force 100% capture only on critical flows and new features.

- Target high-impact moments: Checkout, billing changes, and brand-new features warrant enforced replays.

- Share across teams: PMs validate UX, designers verify intent, engineers reproduce bugs, and customer success visualizes friction—all from the same clips.

“Because our Mixpanel segments are already wired, we filter first, then watch. It saves hours and removes guesswork.”

Johannes Kresling, Senior Product Manager, DISCO

Results

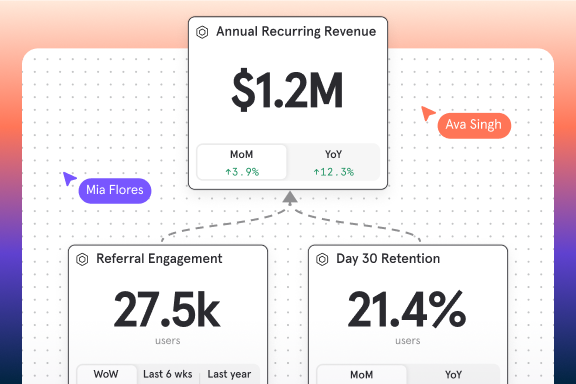

Faster iteration

UX issues that once required days of user interviews are now diagnosed and fixed within hours. DISCO moved from identifying the waveform-scrubbing pattern to shipping a redesigned search UI in a single discovery sprint.

Higher post-release confidence

Enforced replays on new features validate onboarding and intended user paths immediately, reducing reliance on delayed feedback loops.

Smarter incident triage

Teams now distinguish intermittent bugs from systemic problems, prioritizing fixes based on real impact rather than speculation.

Cross-functional alignment

Designers, engineers, PMs, and customer success teams collaborate using the same Mixpanel segments and replay evidence—eliminating opinion-based debates and aligning around observable behavior.

A new product culture

Replay reviews are now embedded in DISCO’s release process. Sprint demos routinely include clips of real user behavior, making empathy and validation a natural part of how the team builds. “Session Replay is the quickest way to validate whether users take the path you designed, or the path they actually want”, Kresling says.

What is next

DISCO is standardizing “force replays on new features” across all three product squads, embedding replay reviews into release checklists, and expanding access so every discipline uses the same Mixpanel segments to drive decisions. “Watching a replay can replace hours of back-and-forth. You see exactly what happened, why it happened, and what to fix next”, Kresling concludes.

Best practices

- Pair charts with replays: Start from a Mixpanel Insight or Funnel, then watch sessions within that segment.

- Instrument force-record events: Cover new features and revenue flows (checkout, upgrades, billing).

- Review onboarding regularly: First-time experiences are uniquely visible through replay—monitor them continuously.

- Keep sampling intentional: 5–15% for ambient coverage; enforce 100% only where needed and time-box it.
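The sampling guidance above can be sketched as a small decision function. This is a minimal illustration, not DISCO's implementation and not Mixpanel's API: the `ReplayPolicy` shape, the `shouldRecord` function, the `checkout_started` event name, and the dates are all hypothetical. It assumes a session is recorded when a force-record event fires inside its time box, and otherwise falls back to the ambient sampling rate.

```typescript
// Hypothetical policy shape; none of these names come from Mixpanel's SDK.
interface ReplayPolicy {
  // Fraction of sessions recorded by default, e.g. 0.10 for ~10% ambient coverage.
  ambientRate: number;
  // Event name -> moment at which forced (100%) capture expires (the time box).
  forcedEvents: Map<string, Date>;
}

// Decide whether to record a session.
// `eventName` is the triggering event, or null when no tracked event has fired.
// `random` is injectable so the sampling branch can be tested deterministically.
function shouldRecord(
  policy: ReplayPolicy,
  eventName: string | null,
  now: Date,
  random: () => number = Math.random,
): boolean {
  if (eventName !== null) {
    const expires = policy.forcedEvents.get(eventName);
    // Forced capture applies only while the time box is still open.
    if (expires !== undefined && now.getTime() < expires.getTime()) {
      return true;
    }
  }
  // Otherwise fall back to ambient sampling.
  return random() < policy.ambientRate;
}

// Example policy: 10% ambient coverage, with checkout forced until a
// hypothetical cutoff date.
const policy: ReplayPolicy = {
  ambientRate: 0.1,
  forcedEvents: new Map<string, Date>([
    ["checkout_started", new Date("2025-02-01T00:00:00Z")],
  ]),
};
```

Storing an expiry date next to each forced event keeps the time box explicit: once the date passes, the event quietly drops back to ambient sampling instead of consuming quota indefinitely.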