User segmentation: A guide to understanding your customers

They say knowledge is power, and perhaps nowhere is this more true than in the world of customer segmentation. The better you know your customers’ shared traits and how those traits relate to when, where, and why they buy your product, and how they use it after they’ve bought it, the better your chances of keeping them as customers by personalizing their experiences. In this comprehensive guide to user segmentation, we’ll define what it is, explain how you can use it, and review some real-world examples of user segmentation in action.

What is user segmentation?

User segmentation is the practice of bucketing users into groups based on common characteristics (e.g., region, age, device, or behavior). Segmentation allows for better targeting based on user traits or behaviors so that each segment can be treated with a more customer-centric approach (it’s what companies like Viber do to understand users based on their specific behavior).

A full 81% of customers wish companies knew them better, while 94% of marketers wish they knew their customers better. Additionally, 48% of product teams aren’t confident they understand their users and their journeys despite their best efforts. So if there’s a clear awareness of the need for companies to better understand customers, why isn’t it happening? Because learning what users want takes tools, time, and—most importantly—thoughtful segmentation.

Let’s explore how you can use segmentation to build product experiences that users love.

Why segment users?

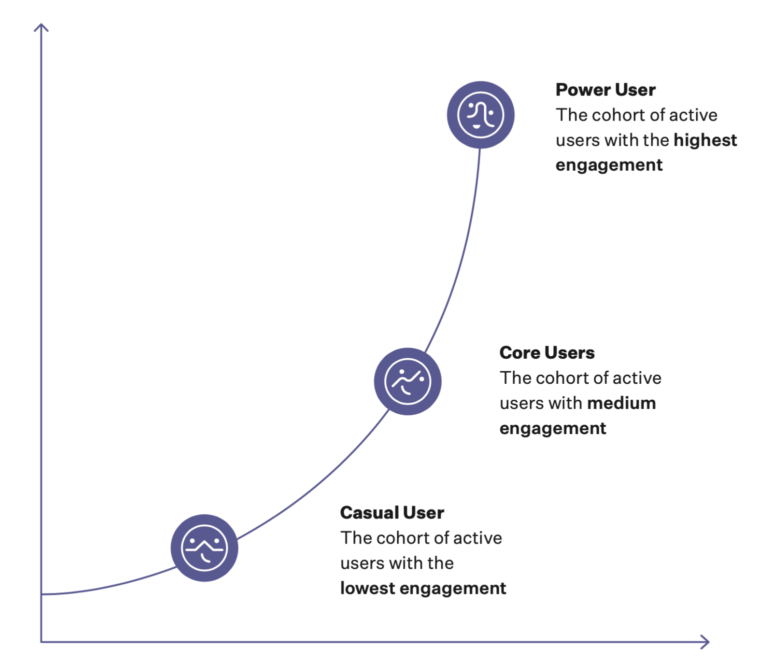

User segmentation helps teams discover what makes valuable power users tick so they can attract more of them.

Without segmentation, product development is a matter of intuition and guesswork, and teams can’t analyze whether their efforts paid off. Was a spike in feature usage due to power users or to more widespread adoption by all users? The team may have a hunch, but without data broken down by user segment, it’s hard to know for certain.

A segmentation of customers by usage

When companies learn from their segments, they can personalize their user experience. Customers today expect intuitive and user-friendly interfaces, which means that product teams need to learn as much as they can about users so their product isn’t trying to be everything to everyone.

Just picture a classic newspaper with no segmentation. It’d be a disorganized jumble of culture, sports, and foreign affairs articles. A baseball fan wouldn’t be able to skip to the recap of yesterday’s game and she’d probably abandon the newspaper in favor of one that’s more focused. It’s a similar experience for any site or app. Users want to find the section they love or need, quickly.

It’s only through observation that product teams can know why certain features or pages resonate with users. Impact analysis for different user segments, or analyzing the effects of various experiments and variants on each segment, can help teams speed up their research and isolate what works. User segmentation also allows teams to develop user profiles that list the characteristics, behaviors, and interests of each segment. Profiles allow product teams to develop or improve products with the user in mind.

Here’s a consumer profile example for a fictional fitness app:

Fit Fiona

- Demographics: Female, aged 25-45

- Psychographics: Loves cycling classes

- Behaviors: Uses the app more than 5 times per week

With this information, the fitness app team can use product analytics to create a cohort of users who fit the “Fit Fiona” consumer profile and check in on it frequently. Whenever the team considers new features, it can keep the cohort in mind and measure how users in the cohort respond to new releases. The team can even assign scores and weights to different cohorts to estimate their lifetime value and measure the ROI of marketing campaigns.
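To make the cohort idea concrete, here is a minimal Python sketch that filters a list of users down to the Fit Fiona profile. The `User` record, its field names, and the sample users are hypothetical; in practice these attributes would come from whatever demographic, psychographic, and behavioral data your analytics tool stores.

```python
from dataclasses import dataclass


# Hypothetical user record; field names are illustrative assumptions.
@dataclass
class User:
    user_id: str
    gender: str
    age: int
    interests: set[str]
    sessions_per_week: float


def is_fit_fiona(user: User) -> bool:
    """Return True if the user matches the Fit Fiona profile."""
    return (
        user.gender == "female"                    # demographics
        and 25 <= user.age <= 45
        and "cycling classes" in user.interests    # psychographics
        and user.sessions_per_week > 5             # behavior: more than 5 times per week
    )


def build_cohort(users: list[User]) -> list[str]:
    """Return the IDs of users who fit the profile."""
    return [u.user_id for u in users if is_fit_fiona(u)]


users = [
    User("u1", "female", 31, {"cycling classes", "yoga"}, 6),
    User("u2", "female", 52, {"cycling classes"}, 7),  # outside the age range
    User("u3", "male", 29, {"cycling classes"}, 6),    # different demographic
    User("u4", "female", 40, {"running"}, 8),          # different interest
    User("u5", "female", 27, {"cycling classes"}, 3),  # uses the app less often
]

print(build_cohort(users))  # ['u1']
```

Keeping the profile as a single predicate function makes it easy to reuse the same definition when measuring how the cohort responds to each release.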

Examples of important user segments

Based on the traits or data used to bucket users, user segmentation can be divided into the following categories:

- Demographic data: such as gender, age, language, location, marital status, or income

- Psychographic data: about a user’s interests, beliefs, affiliations, or socioeconomic status

- Behavioral data: about user actions, such as login frequency, time spent in a product, and general level of engagement

- Firmographic data: demographic-like data for businesses, such as company age, employee count, revenue, industry, location, and business model (B2B or B2C)

- Technographic data: what technologies a company uses, such as CRM, marketing system, or ERP provider

Depending on the data you have on hand, you can segment your users on a multitude of factors:

- Paid vs. free users: Paid users are not only more profitable, but often more advanced and committed, and easier to retain than free users. Segmentation allows teams to focus on retaining the former and converting the latter.

- Usage frequency: Usage frequency is a great way to bucket users by their level of engagement. Users who return often are finding enough value to keep coming back. Surfacing the features or content that frequent users enjoy to new users can help those new users reach value faster.

- Time on site/app: The time users spend on a site or app is usually a good indicator of the value it creates for them. Segmenting users by time spent allows teams to learn which features, factors, and content are correlated with higher engagement, so they can surface ideas that increase usage.

- Conversion goals: Segmenting on conversion allows you to find the differences between users who subscribe and those who don’t, or between users who purchased items ten times and those who purchased only once. This information can ultimately be used to convert more users, faster.

- Negative behaviors: Not all user behaviors are positive — sometimes customers churn, abandon their shopping carts, or downgrade their plan. By segmenting users that complete negative actions and then analyzing the events that preceded these actions, such as a bug encounter or a new feature release, teams can identify causes of friction and improve the overall user experience.

- Lack of activity: A lack of behaviors can also be concerning. Segmentation can identify users who skip important actions in a workflow or don’t fully use a valuable feature, so teams can understand what causes them to stop engaging and re-engage them before they churn.
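Frequency-based segments, including an "inactive" bucket for users showing a lack of activity, can be sketched as simple threshold rules. The following Python example assumes you already have, for each user, the number of distinct days they were active in the last 30 days; the cutoffs (20, 5, and 1 days) and segment names are illustrative assumptions, not an industry standard, and should be tuned to how often your product is meant to be used.

```python
from collections import Counter


def segment_by_frequency(active_days: dict[str, int]) -> dict[str, str]:
    """Map each user ID to a frequency segment.

    active_days maps user IDs to the number of distinct days the user was
    active in the last 30 days. The thresholds below are illustrative.
    """
    segments = {}
    for user_id, days in active_days.items():
        if days >= 20:
            segments[user_id] = "power"
        elif days >= 5:
            segments[user_id] = "regular"
        elif days >= 1:
            segments[user_id] = "occasional"
        else:
            # No activity at all: a candidate for re-engagement before churn.
            segments[user_id] = "inactive"
    return segments


active_days = {"ana": 24, "ben": 9, "cai": 2, "dev": 0}
segments = segment_by_frequency(active_days)
print(segments)  # {'ana': 'power', 'ben': 'regular', 'cai': 'occasional', 'dev': 'inactive'}
print(Counter(segments.values()))  # size of each segment
```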

Any piece of data companies track on their users can be useful in segmentation, so long as those distinctions are meaningful. Customer segmentation should always tie back to a business goal such as engagement or retention.

The sum of each segment’s likes, dislikes, behaviors, and characteristics offers clues to what its users want. It’s up to each team to record its users’ desires in a user profile and to change its product’s features, messaging, and marketing to better meet users’ needs.

So how does a team decide how to segment its user base? Let’s explore how a user segmentation platform like Mixpanel can help.

The process of implementing user segmentation

The customer segmentation process consists of four steps:

1. Track user engagement

To segment customers, companies need a way to track product usage for each user. But getting a grasp on your entire audience isn’t always easy. Most sites and apps aren’t built to analyze themselves and it can be difficult to track individual users and the journeys they follow through the app, much less compare two different user segments.

A media site, for example, might be able to use basic web analytics to determine how many users merely visit its publication versus how many stay to click an ad. But which users are which? What user flow do they typically take? How do you track and target only the users who subscribed but never returned? To draw meaningful distinctions, the media site needs a way to separate visitors not just by their characteristics but also by their behaviors.

A product analytics tool is often the answer. Mixpanel, for example, integrates into a site, an app, or both, to pull usage data that the site or app was never designed to capture. It offers a user-friendly interface with which teams can create reports, break down data, track funnels, and define user segments, all in one platform.
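To see why per-user event data matters, here is a minimal Python sketch that answers the media-site question of which users subscribed but never returned. The tuple layout and event names such as `subscribe` are hypothetical, and "never returned" is simplified to mean that no event was recorded after the user's first subscription.

```python
from datetime import datetime

# Hypothetical raw event log: (user_id, event_name, timestamp).
events = [
    ("u1", "page_view", datetime(2024, 3, 1, 9, 0)),
    ("u1", "subscribe", datetime(2024, 3, 1, 9, 5)),
    ("u2", "page_view", datetime(2024, 3, 1, 10, 0)),
    ("u2", "subscribe", datetime(2024, 3, 1, 10, 2)),
    ("u2", "page_view", datetime(2024, 3, 4, 8, 30)),
    ("u3", "page_view", datetime(2024, 3, 2, 12, 0)),
]


def subscribed_never_returned(events):
    """Return IDs of users with no recorded activity after their first subscribe."""
    first_subscribe = {}
    last_seen = {}
    for user_id, name, ts in events:
        # Track the latest event of any kind for each user.
        last_seen[user_id] = max(ts, last_seen.get(user_id, ts))
        # Track the earliest subscribe event for each user.
        if name == "subscribe":
            first_subscribe[user_id] = min(ts, first_subscribe.get(user_id, ts))
    return sorted(
        user_id
        for user_id, subscribed_at in first_subscribe.items()
        if last_seen[user_id] <= subscribed_at
    )


print(subscribed_never_returned(events))  # ['u1']
```

Basic page-level web analytics usually can't produce this list, because it requires tying every event back to an individual user.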

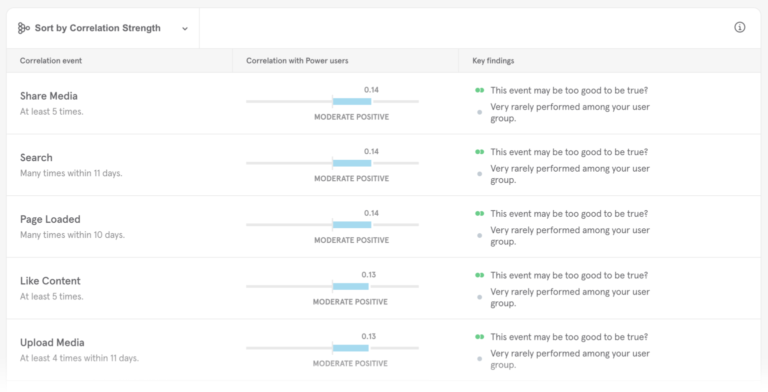

An Insights report in Mixpanel that shows correlation between certain events and high engagement

2. Identify segments based on business priorities

As mentioned, segments are useful only if they’re tied back to business priorities (such as engagement). If teams know what they want their users to do, they already know their first few segments.

If the goal is for users to make a purchase, one segment could be for users who have purchased. Another could be for users who haven’t. A third could be for those who have purchased repeatedly. If the goal is to increase app usage, teams could segment by time spent in-app to isolate their power users from the sea of occasional visitors.
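The purchase-based segments described above can be expressed as a small rule over each user's purchase count. This Python sketch assumes a flat log with one user ID per purchase event plus a separate list of all users; the user IDs and segment labels are hypothetical.

```python
from collections import Counter


def purchase_segment(purchase_count: int) -> str:
    """Assign a purchase-based segment from a user's purchase count."""
    if purchase_count == 0:
        return "not yet purchased"
    if purchase_count == 1:
        return "one-time buyer"
    return "repeat buyer"


# Hypothetical data: one user ID per purchase event, plus the full user list
# (users with no purchases never appear in the purchase log).
purchase_log = ["u1", "u2", "u2", "u3", "u3", "u3"]
all_users = ["u1", "u2", "u3", "u4"]

counts = Counter(purchase_log)  # missing users count as 0
segments = {user: purchase_segment(counts[user]) for user in all_users}
print(segments)
# {'u1': 'one-time buyer', 'u2': 'repeat buyer', 'u3': 'repeat buyer', 'u4': 'not yet purchased'}
```

Starting from the full user list, rather than only the purchase log, is what keeps the "haven't purchased" segment from silently disappearing.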

Here are metrics teams might use to define segments:

- Engagement / usage

- Acquisition source

- Customer lifetime value (CLV)

- Retention

- Churn

- Average visits per user

- Conversions

- Important user milestones

Product analytics platforms allow teams to create segments more quickly. Mixpanel, for example, tracks interactions that occur on a site or within an app and highlights the most frequently occurring events, so teams can figure out which metrics they should track.

3. Use analytics to generate reports

With a product analytics tool, teams can create, save, and share reports for different user segments to see how each segment affects key metrics. Teams might generate reports to:

- Compare usage for paid and free users

- Measure the retention rate for users acquired from social media

- Determine which actions taken during a free trial make users more likely to purchase

- See whether usage increased after a new feature release
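As a minimal sketch of the first report on this list, comparing usage for paid and free users, the Python below groups hypothetical per-user rows by plan and averages weekly sessions. The row layout and numbers are invented for illustration; an analytics tool would compute the same kind of breakdown from tracked events.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-user rows: (user_id, plan, sessions_last_week).
rows = [
    ("u1", "paid", 12),
    ("u2", "paid", 8),
    ("u3", "free", 3),
    ("u4", "free", 5),
    ("u5", "free", 1),
]


def average_usage_by_plan(rows):
    """Return the mean weekly sessions for each plan segment."""
    sessions_by_plan = defaultdict(list)
    for _user_id, plan, sessions in rows:
        sessions_by_plan[plan].append(sessions)
    return {plan: mean(values) for plan, values in sessions_by_plan.items()}


report = average_usage_by_plan(rows)
for plan, avg in report.items():
    print(f"{plan}: {avg:.1f} sessions/week")
```

Swapping the `plan` field for acquisition source or a before/after-release flag would turn the same grouping into the other reports on the list.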

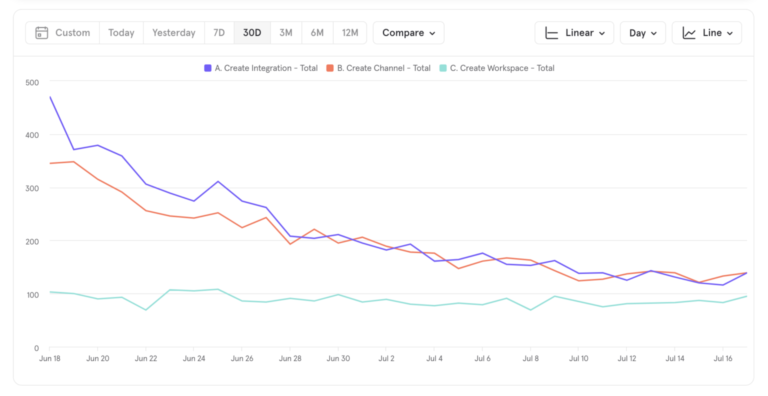

A Mixpanel Insights report that shows the channel and integration features aren’t being used as often as before

4. Make changes based on segments

By comparing segments to each other or to the entire user population, teams can spot meaningful differences that point to roadmap changes that improve their product.

For a mobile fitness app, for example, a team might find that its most valuable users finish setting up their profile more often than the average user. The team can then change its onboarding flow to make sure that every new user completes their profile.
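A comparison like the fitness-app example boils down to computing the same rate for a segment and for the whole population. This Python sketch assumes each user has already been flagged as high-value (for instance, by lifetime value) and as having completed their profile; all data is hypothetical. A gap between the two rates is a correlation, so the onboarding change is a hypothesis worth testing rather than a guaranteed win.

```python
# Hypothetical rows: (user_id, is_high_value, completed_profile).
users = [
    ("u1", True, True),
    ("u2", True, True),
    ("u3", True, False),
    ("u4", False, True),
    ("u5", False, False),
    ("u6", False, False),
]


def profile_completion_rate(rows):
    """Share of users in `rows` who completed their profile."""
    if not rows:
        return 0.0
    return sum(1 for _, _, completed in rows if completed) / len(rows)


high_value = [row for row in users if row[1]]
print(f"High-value users: {profile_completion_rate(high_value):.0%}")  # 67%
print(f"All users: {profile_completion_rate(users):.0%}")  # 50%
```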

An enterprise financial services app might find that users who came from sales-generated leads have a greater lifetime value than self-provisioned ones, which could push the team to focus marketing dollars on sales enablement. Or a social media app might find that users who download its mobile app are twice as sticky, and promote the app on its website to drive more mobile users. Ultimately, segmentation leads to insights that often allow teams to build better products.

User segmentation case studies

Here are three real-world examples of user segmentation in action.

1. Ticketmaster

Ticketmaster, the world’s leading ticketing company and one of the world’s largest eCommerce sites, used Mixpanel for deep user segmentation insights into its B2B digital marketing application, FanBuilder. The FanBuilder team leveraged Mixpanel to better understand engagement by user type and company in order to help grow the most profitable venues, artists, and promoters.

2. Deliveroo

Deliveroo, a leading online food delivery company operating in 500+ cities, uses Mixpanel to test hypotheses that boost engagement and decrease churn. Deliveroo operates three different groups based on user segments: Restaurants (i.e., delivery), Rider, and Consumer. Deliveroo’s Restaurants group is a B2B segment within a B2C company, focusing on the business entity rather than the individual user. With Mixpanel, Deliveroo has been able to analyze user segment data by restaurant chain (i.e., the entity) rather than by user, which could cloud the data (and results) because a single restaurant can have multiple users. The company has started using this capability for even higher-level grouping, such as the performance of restaurants under a brand umbrella.
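The difference between user-level and entity-level analysis can be illustrated with a few hypothetical events. In this Python sketch, each event carries both the user who triggered it and the restaurant that user acts for; the IDs and the restaurant-to-brand mapping are made up for illustration and do not reflect Deliveroo's actual data model or Mixpanel's API.

```python
from collections import Counter

# Hypothetical events: (user_id, restaurant_id). Several staff members
# can act on behalf of the same restaurant.
events = [
    ("alice", "r1"),
    ("bob", "r1"),
    ("carol", "r1"),
    ("dan", "r2"),
    ("erin", "r3"),
]

# Hypothetical mapping of restaurants to the brand they operate under.
brand_of = {"r1": "noodle_co", "r2": "noodle_co", "r3": "corner_cafe"}

# User-level view: five users with one event each, which hides the fact
# that three of them belong to the same restaurant.
by_user = Counter(user for user, _ in events)

# Entity-level view: activity rolled up to each restaurant.
by_restaurant = Counter(restaurant for _, restaurant in events)

# Higher-level view: restaurants grouped under a brand umbrella.
by_brand = Counter(brand_of[restaurant] for _, restaurant in events)

print(dict(by_restaurant))  # {'r1': 3, 'r2': 1, 'r3': 1}
print(dict(by_brand))       # {'noodle_co': 4, 'corner_cafe': 1}
```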

3. Rakuten Viber

Viber is a cross-platform instant messaging and voice over IP (VoIP) application operated by the Japanese multinational Rakuten. Viber leveraged Mixpanel to better understand what makes Viber more fun for its users and to analyze KPIs such as average session length by user segment, allowing the company to move the needle on its most important business drivers: increasing engagement and improving retention.

Ready to start segmenting your users?

If personalization is key to long-term product success, then segmentation is one of the best tools for giving groups of users what they need. There’s never been a better time than now to ask: who are my users, what do they want, and how can I give it to them?

Gain insights into how best to convert, engage, and retain your users with Mixpanel’s powerful product analytics.